Although I enjoy hiking through forests, I really ought to leave the camera at home: I might take dozens or even a hundred photos, and none of them will be keepers. I ought to stick to architectural photography, since people tell me I'm good at it. Taking forest photos, for me, is a futile exercise. For example:

Yeah, it is green. A bad photo. And worst of all, for me, it doesn't look the way I remember it. This was an awesome scene: even though it was an hour before sunset, this stretch of the trail was very dark and quite mysterious. I pointed the camera, and this is the result. Not a keeper.

Now, I'm sure a creative digital artist could make something of this, but I'm not creative. I just like straightforward image corrections, in the school of Dan Margulis. While I could just fiddle with the Adobe Camera Raw settings, or start applying curves in Photoshop, I would much rather start from things I know with more certainty.

We have two facts to work with: the scene was very dark, and the photo does not look the way I remember seeing the scene. More specifically, the green foliage was not, to my eyes, the bright yellowish green seen here; the foliage was darker, yet distinct, maybe a bit bluish green.

Cameras are designed to approximate human vision in broad daylight, but as light dims, human vision switches on special adaptations for seeing in the dark. One result of these adaptations is the Purkinje effect, and cameras do not correct for it. So we can expect cameras not to record what we actually perceive in dim lighting.

See my first article in this series: Photography in Low Light, part 1: The Purkinje Effect.

As light dims, the eye perceives blue things as relatively brighter, while red things darken considerably; eventually, we can no longer see color, only shades of gray. The scene above was very dim; I estimate that a sunny day is about 1800 times brighter! The Purkinje effect was certainly very active, so perhaps there is a Purkinje correction we can apply to improve the image.
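Photographers usually think of brightness ratios in stops, where each stop is a doubling of light. A minimal sketch of that conversion, assuming only that the 1800× estimate above is the ratio being converted (the function name is illustrative):

```python
import math


def ratio_to_stops(brightness_ratio: float) -> float:
    """Number of photographic stops (doublings of light) in a brightness ratio."""
    if brightness_ratio <= 0:
        raise ValueError("brightness ratio must be positive")
    return math.log2(brightness_ratio)


# The estimate above: a sunny day is about 1800 times brighter than the trail scene.
print(f"{ratio_to_stops(1800):.1f} stops")
```

By this estimate, the trail was roughly 11 stops darker than a sunny day.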

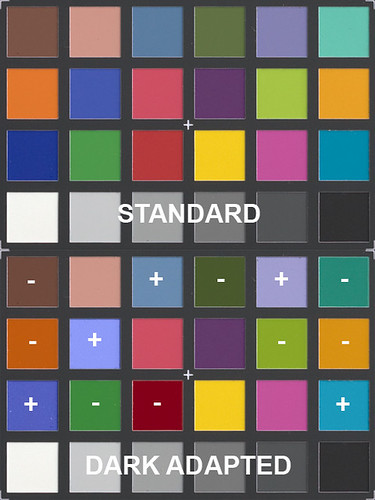

In my previous article, I naïvely assumed that boosting the luminance contribution of the blue channel would approximate the Purkinje effect, and while that seemed to work, it is not a good model of what happens in the eye. Here is my Purkinje correction, version 1:

- In Photoshop, duplicate the layer.

- Apply the Blue channel to the top layer, with Normal blending.

- Change the blending mode of the top layer to Luminosity.

- Adjust opacity to taste.
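The four steps above can be sketched per pixel in Python. This is an approximation, not Photoshop's code: it assumes RGB values from 0 to 1, and it models the Luminosity blend with the non-separable Luminosity formula from the W3C Compositing and Blending specification (derived from the PDF blend modes), which may differ slightly from Photoshop's internal math. The function names are hypothetical.

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # channel values in 0..1


def lum(c: RGB) -> float:
    # Luminance weights from the W3C Compositing and Blending spec.
    r, g, b = c
    return 0.3 * r + 0.59 * g + 0.11 * b


def clip_color(c: RGB) -> RGB:
    # Pull out-of-range channels back into 0..1 while preserving luminance.
    l = lum(c)
    n, x = min(c), max(c)
    if n < 0:
        c = tuple(l + (v - l) * l / (l - n) for v in c)
    if x > 1:
        c = tuple(l + (v - l) * (1 - l) / (x - l) for v in c)
    return c


def set_lum(c: RGB, l: float) -> RGB:
    # Shift all channels equally so the color takes on luminance l.
    d = l - lum(c)
    return clip_color(tuple(v + d for v in c))


def purkinje_v1(pixel: RGB, opacity: float) -> RGB:
    # Steps 1-2: the duplicated layer becomes gray, R = G = B = the original blue value.
    blue = pixel[2]
    # Step 3: Luminosity keeps the base hue and saturation but takes the top layer's
    # luminance; a gray (b, b, b) has luminance b, so the target is just the blue value.
    blended = set_lum(pixel, blue)
    # Step 4: layer opacity mixes the blended result with the original pixel.
    return tuple(p * (1 - opacity) + q * opacity for p, q in zip(pixel, blended))


foliage = (0.6, 0.8, 0.2)  # a hypothetical yellowish-green leaf pixel
print(purkinje_v1(foliage, opacity=0.5))
```

At full opacity every pixel's luminance becomes its blue value, so yellow-greens and reds darken while blues hold up; lowering the opacity is the "adjust to taste" step.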

Our eyes do an automatic white balance under many circumstances: if the lighting is bright and fairly uniform in color temperature, our eyes quickly adapt to the conditions and neutral tones appear neutral to us. When we photograph the same scene, we had better set the white balance manually, otherwise very strong color casts will result. As an experiment, set your camera to 'daylight' and photograph an interior scene lit by incandescent or fluorescent lighting, and see just how much correction is built into the eye! Conversely, set your camera to 'incandescent' (also called tungsten) and see what a daylight scene looks like.

The latter technique, using a tungsten white balance or tungsten film and underexposing while shooting outdoors, is an old cinematographer's trick for simulating nighttime during the day, known as "day for night". Now, I don't find that trick particularly convincing, but it ought to give us clues to what may be happening in the eye.

This is a shoddy special effect, and when I was young, I strongly rejected it. I still don't like it, but I am more tolerant of folks who make the most of limited resources: filming at night is more expensive than filming in the daytime, and daylight shooting makes the cast and crew happier. But this exercise does tell us something: night vision is more blue and less red. (This photo was taken from the same trail, and is a view of the Mississippi River.)

So let's try this on my initial example image. In Adobe Camera Raw, I set the white balance to Tungsten and reduced the brightness a lot. We'll call this the Purkinje correction, version 2.
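Roughly speaking, raw converters apply white balance as per-channel multipliers on linear sensor data. A minimal sketch of the version 2 idea under that simplification: the gains below are hypothetical stand-ins for Camera Raw's Tungsten preset (which actually works from a color temperature and tint, not three fixed numbers), and the brightness cut is expressed in stops.

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # linear channel values in 0..1

# Hypothetical gains: a tungsten white balance applied to a daylight scene
# effectively weakens red and strengthens blue relative to a daylight setting.
TUNGSTEN_ON_DAYLIGHT = (0.6, 1.0, 1.6)


def purkinje_v2(pixel: RGB, gains: RGB = TUNGSTEN_ON_DAYLIGHT,
                stops_down: float = 2.0) -> RGB:
    exposure = 2.0 ** -stops_down  # each stop down halves the light
    # Scale each channel by its white-balance gain and the exposure, clipping at white.
    return tuple(min(1.0, v * g * exposure) for v, g in zip(pixel, gains))


neutral_gray = (0.5, 0.5, 0.5)
print(purkinje_v2(neutral_gray))
```

A neutral gray comes out dark and blue-tinted, which is exactly the look of the old cinematographer's trick.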

Yes, it is dark, and perhaps it would be better viewed on a dark background. The foliage is now much less yellow, which is closer to how I recall seeing the scene. But this is still not a good image, and it is unconvincing to me. A true Purkinje correction would shift some color tones around, and would certainly make reds darker and blues brighter, but I think the eye still attempts some white balancing.

I'm not really interested in taking a daytime photograph and making it appear as though it is nighttime, although that might be useful for other people. (The major flaw in this trick is the absence of bright artificial lights in these photos, although I've seen some people paint in lights quite convincingly.) Rather, I'm interested in making dim scenes look more as I remember seeing them.

I would like a photograph that implies a dark forest scene, while not actually being all that dark. I want a photograph that can be easily viewed on a normal computer screen while still conveying the impression I had. Is this a realistic wish, or not?

- This is not a realistic wish, because I do not have a full frequency spectrum for each pixel of the image: I only have three broad, overlapping measurements of the spectrum, corresponding to the red, green, and blue channels. If I had full spectra, the data would be available to calculate the perceived color for most people under most lighting conditions, and this would, in theory, solve my problem.

- We might be able to do OK with the data we do have. We cannot measure the whole frequency spectrum of each pixel in the scene, but we can know for a fact roughly which ranges of frequencies are absent from each pixel: if a pixel's red channel is near zero, for example, little long-wavelength light reached it. Our results might not be perfect, but they may be plausible. I'll take this latter approach.
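The first option can be illustrated with a toy model. If we did have a full spectrum for a pixel, we could weight it by a daylight (photopic, cone-based) sensitivity curve and by a dim-light (scotopic, rod-based) curve and compare the results. This sketch assumes Gaussian curves peaking near 555 nm and 507 nm, the approximate peaks of the standard photopic and scotopic luminosity functions; the widths and the narrowband test lights are made-up simplifications, not the published tables.

```python
import math
from typing import Callable

Curve = Callable[[float], float]
WAVELENGTHS_NM = range(380, 781, 5)  # visible range in 5 nm steps


def gaussian(peak_nm: float, width_nm: float) -> Curve:
    return lambda wl: math.exp(-0.5 * ((wl - peak_nm) / width_nm) ** 2)


# Assumed Gaussian stand-ins for the eye's luminosity functions.
PHOTOPIC = gaussian(555, 45)  # cones, daylight
SCOTOPIC = gaussian(507, 45)  # rods, dim light


def brightness(spectrum: Curve, sensitivity: Curve) -> float:
    # Perceived brightness ~ light power times sensitivity, summed over wavelengths.
    return sum(spectrum(wl) * sensitivity(wl) for wl in WAVELENGTHS_NM)


red_light = gaussian(620, 10)   # hypothetical narrowband red source
blue_light = gaussian(470, 10)  # hypothetical narrowband blue source, same peak power

day = brightness(red_light, PHOTOPIC) / brightness(blue_light, PHOTOPIC)
night = brightness(red_light, SCOTOPIC) / brightness(blue_light, SCOTOPIC)
print(f"red/blue brightness: daylight {day:.2f}, dim light {night:.2f}")
```

In this toy model the red light looks brighter than the blue one in daylight but far dimmer in dim light, which is the Purkinje shift. A camera's three broad RGB filters throw away exactly the spectral detail this calculation needs.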

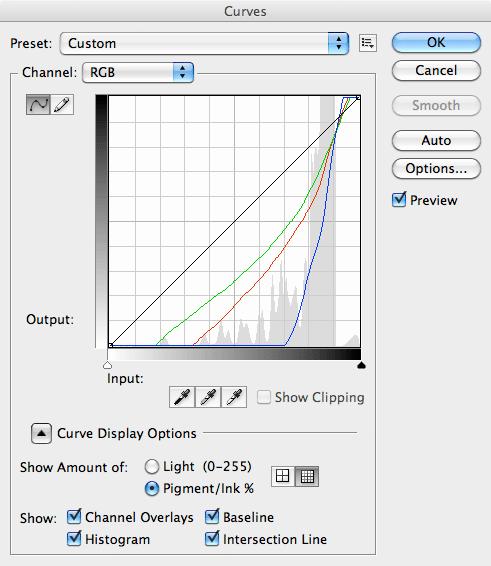

This makes a monochrome image that is strongly influenced by blue, and not at all influenced by red. I am more confident of the red setting than of the green or blue settings, however. I placed this on top of my original image in Luminosity blending mode, added a bit of contrast, and reduced the opacity; this is the result:

Not perfect, but I think it is more interesting and closer to what I remember seeing. This looks better than other methods I've tried.

This is the Purkinje correction, version 3. Next, I want to look at how brightness changes the eye's white balance.
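Version 3 can be sketched per pixel as well, under stated assumptions. The red weight of 0 follows the text, but the green and blue weights, the contrast boost, and the opacity are hypothetical placeholders, not the values in the screenshot. The Luminosity blend is approximated here by replacing HLS lightness using Python's `colorsys` module, a simplification rather than Photoshop's own luminance formula.

```python
import colorsys
from typing import Tuple

RGB = Tuple[float, float, float]  # channel values in 0..1

# Monochrome mixer weights: red contributes nothing (as in the article);
# the green and blue weights are hypothetical guesses.
MIX = (0.0, 0.4, 0.6)


def clamp(v: float) -> float:
    return min(1.0, max(0.0, v))


def mono(pixel: RGB, mix: RGB = MIX) -> float:
    # Weighted sum of channels, like a monochrome channel-mixer layer.
    return clamp(sum(v * w for v, w in zip(pixel, mix)))


def add_contrast(v: float, amount: float) -> float:
    # Simple linear contrast stretch around middle gray.
    return clamp(0.5 + (v - 0.5) * (1.0 + amount))


def purkinje_v3(pixel: RGB, contrast: float = 0.2, opacity: float = 0.6) -> RGB:
    lightness = add_contrast(mono(pixel), contrast)
    # Approximate Luminosity blending: keep hue and saturation, replace lightness.
    h, _, s = colorsys.rgb_to_hls(*pixel)
    blended = colorsys.hls_to_rgb(h, lightness, s)
    # Reduced opacity mixes the blended layer back with the original pixel.
    return tuple(p * (1 - opacity) + q * opacity for p, q in zip(pixel, blended))


print(purkinje_v3((0.6, 0.8, 0.2)))
```

Because red contributes nothing to the new lightness, red-dominant pixels go dark while blue-dominant pixels get lighter, the same direction as the Purkinje shift.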

UPDATE: Using the Channel Mixer tool in Photoshop, I was able to more closely mimic the eye’s color response in dim lighting due to the Purkinje Effect. See the article Examples of Color Mixing.